RTPEngine, as its GitHub project page describes it, is a proxy for RTP and other UDP-based media traffic. It is typically used alongside a SIP proxy such as Kamailio or OpenSIPS: the SIP proxy handles SIP signaling, and RTPEngine takes care of the RTP/media plane.

In this post, I am not going to show how to install or configure RTPEngine. Instead, I would like to share what I learned from its source code about RTPEngine’s internal design and its packet handling flow.

But why did I try to understand the flow? First of all, it helps me contribute new features, because all of us, as open-source users, should be committed to the projects we use and to their communities! Also, I sometimes spend time getting familiar with a project's code so that I can debug issues better. With RTPEngine, there was a third reason: I needed to change the flow of RTP packets based on some requirements.

Note: these findings come from my own reading of the code and some experiments, and they might leave the project owners a little shocked :)

How does RTPEngine work internally?

The RTPEngine project includes various folders, but the main sources are under the daemon folder, where you can find the implementation of the different project elements and features. Two files in this folder hold what I found to be the core functions of RTPEngine: call.c and media_socket.c. Obviously, the other files are important too, but if you are following the main RTP flow in RTPEngine, these are the files to focus on. Let’s see how we reach them.

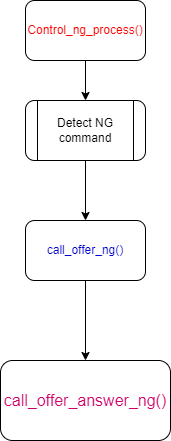

RTPEngine receives action commands such as offer and answer through the ng protocol, a control method that lets a SIP proxy, or any other controlling entity, drive the RTPEngine daemon. You can configure the ng port and protocol in the RTPEngine configuration. In control_ng.c you can find a function named control_ng_process(...), which is called whenever RTPEngine receives a command on the ng port. This function detects the command and takes the proper action. For example, if the command is ‘offer’, it calls call_offer_ng(...), which is located in call_interfaces.c. call_offer_ng(...) in turn calls call_offer_answer_ng(...), which I think is an important function because it lets RTPEngine create the required handlers.
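To make the dispatch idea concrete, here is a minimal sketch of a command table in C. It is not RTPEngine's code: the handler names, the table, and dispatch_ng_command() are hypothetical, and the real control_ng_process(...) works on the parsed ng message, which this sketch leaves out entirely.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical handler type; the real handlers receive the parsed ng message. */
typedef const char *(*ng_handler_fn)(void);

/* Hypothetical stand-ins for call_offer_ng(...) and its answer counterpart. */
const char *sketch_handle_offer(void)  { return "offer handled"; }
const char *sketch_handle_answer(void) { return "answer handled"; }

struct ng_command {
    const char *name;       /* command name as it arrives over ng */
    ng_handler_fn handler;  /* function to run for that command */
};

static const struct ng_command ng_commands[] = {
    { "offer",  sketch_handle_offer  },
    { "answer", sketch_handle_answer },
};

/* Looks up the command by name and runs its handler.
 * Returns the handler's result, or NULL for an unknown command. */
const char *dispatch_ng_command(const char *command)
{
    assert(command != NULL);
    for (size_t i = 0; i < sizeof(ng_commands) / sizeof(ng_commands[0]); i++) {
        if (strcmp(command, ng_commands[i].name) == 0)
            return ng_commands[i].handler();
    }
    return NULL;
}
```

Calling dispatch_ng_command("offer") runs the offer handler, while an unknown name falls through and returns NULL so the caller can report an error.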

So here, we have this flow: control_ng_process(...) -> call_offer_ng(...) -> call_offer_answer_ng(...)

call_offer_answer_ng(...) is the place where we can actually see RTPEngine's packet handling logic being created. This function creates a call entity, which is the main parent structure of all call-related objects. It also creates a call_monologue, which represents a call participant. A call_monologue contains a list of subscribers and a list of subscriptions, which are other call_monologues. These lists are reciprocal, and a regular A/B call would have two call_monologues with each subscribed to the other. A call_monologue also includes a list of call_media objects, which keep the media attributes and related things. I hope you are not lost!!

call_media also keeps a list of packet_stream objects, and finally each packet_stream is linked to a stream_fd. It means the object hierarchy under the call structure is: call -> call_monologue -> call_media -> packet_stream -> stream_fd

If we look at this flow from bottom to top, when RTPEngine receives incoming RTP packets, they are initially received by a stream_fd, which links directly to a packet_stream. Each packet_stream then links to a call_media. There are two packet_stream objects per call_media, one for RTP and one for RTCP. The call_media then links to a call_monologue, which corresponds to a participant in the call.
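The bottom-to-top walk can be sketched with a few heavily simplified structs. The struct names follow the objects described above, but every field here, and the monologue_of() helper, is an assumption made for illustration; the real definitions carry many more members and use lists rather than single pointers.

```c
#include <assert.h>
#include <stddef.h>

struct packet_stream;                 /* defined below */

struct call {
    const char *call_id;              /* hypothetical field */
};

struct call_monologue {
    struct call *call;                /* parent call */
    const char *tag;                  /* hypothetical: identifies the participant */
};

struct call_media {
    struct call_monologue *monologue; /* participant that owns this media */
    struct packet_stream *rtp;        /* one stream for RTP ... */
    struct packet_stream *rtcp;       /* ... and one for RTCP */
};

struct packet_stream {
    struct call_media *media;         /* media section this stream belongs to */
};

struct stream_fd {
    int fd;                           /* socket the packets arrive on */
    struct packet_stream *stream;     /* stream served by that socket */
};

/* Walks stream_fd -> packet_stream -> call_media -> call_monologue. */
struct call_monologue *monologue_of(const struct stream_fd *sfd)
{
    assert(sfd != NULL && sfd->stream != NULL && sfd->stream->media != NULL);
    return sfd->stream->media->monologue;
}
```

Given a stream_fd for an incoming packet, monologue_of() answers "which participant sent this?" by following the same links in the same order as the paragraph above.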

During signalling events, the list of subscriptions for each call_monologue is used to create the rtp_sinks and rtcp_sinks lists in each packet_stream. Each entry in these lists is a sink_handler object, which again contains flags and attributes. Flags from a call_subscription are copied into the sink_handler. So, during actual packet handling and forwarding, only the sink_handler objects and the packet_stream objects they relate to are used.
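Here is a rough sketch of that signalling-time step, under my own assumptions: each monologue has a single RTP packet_stream, lists are fixed-size arrays, flags is a plain integer, and install_sinks() is a hypothetical helper. It also assumes that when A subscribes to B, the sink is installed on B's stream and points at A's stream, since B's media has to reach A.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_PEERS 4

struct packet_stream;
struct call_monologue;

struct sink_handler {
    struct packet_stream *sink;        /* destination stream */
    unsigned int flags;                /* copied from the subscription */
};

struct packet_stream {
    struct sink_handler rtp_sinks[MAX_PEERS];
    size_t num_rtp_sinks;
};

struct call_subscription {
    struct call_monologue *source;     /* participant we receive media from */
    unsigned int flags;                /* hypothetical flag bits */
};

struct call_monologue {
    struct packet_stream rtp;          /* simplified: one RTP stream only */
    struct call_subscription subscriptions[MAX_PEERS];
    size_t num_subscriptions;
};

/* For every subscription of `subscriber`, add a sink on the source's
 * stream that points back at the subscriber's stream. */
void install_sinks(struct call_monologue *subscriber)
{
    assert(subscriber != NULL);
    for (size_t i = 0; i < subscriber->num_subscriptions; i++) {
        const struct call_subscription *sub = &subscriber->subscriptions[i];
        struct packet_stream *src = &sub->source->rtp;
        if (src->num_rtp_sinks >= MAX_PEERS)
            continue;                  /* sketch only: silently skip when full */
        struct sink_handler *sh = &src->rtp_sinks[src->num_rtp_sinks++];
        sh->sink = &subscriber->rtp;
        sh->flags = sub->flags;        /* flags travel with the sink */
    }
}
```

For a regular A/B call, running install_sinks() on both monologues leaves one sink on each stream, each pointing at the other side.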

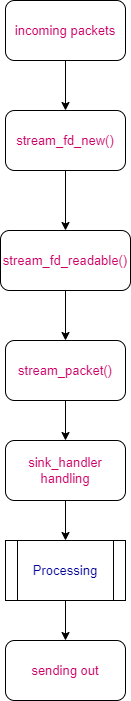

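We have this flow: stream_fd -> packet_stream -> sink_handler (from rtp_sinks or rtcp_sinks) -> destination packet_stream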

In other words, packet handling is done in media_socket.c and starts with stream_packet(). This operates on the originating stream_fd and its linked packet_stream, and eventually goes through the list of sinks, either rtp_sinks or rtcp_sinks, using the contained sink_handler objects, which point to the destination packet_stream.
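The forwarding loop can be pictured roughly like this. It is a simplified model, not the body of stream_packet(): forward_rtp_packet() is a hypothetical name, the sinks list is a fixed-size array, and a packets_out counter stands in for the actual socket send.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_SINKS 4

struct packet_stream;

struct sink_handler {
    struct packet_stream *sink;   /* destination stream */
};

struct packet_stream {
    struct sink_handler rtp_sinks[MAX_SINKS];
    size_t num_rtp_sinks;
    size_t packets_out;           /* hypothetical counter replacing a real send */
};

struct stream_fd {
    int fd;
    struct packet_stream *stream; /* stream the packet arrived on */
};

/* Hands one received RTP packet of `len` bytes to every sink of the
 * originating stream. Returns how many sinks received it. */
size_t forward_rtp_packet(struct stream_fd *sfd, size_t len)
{
    assert(sfd != NULL && sfd->stream != NULL);
    if (len == 0)
        return 0;                 /* nothing to forward */

    struct packet_stream *in = sfd->stream;
    size_t forwarded = 0;
    for (size_t i = 0; i < in->num_rtp_sinks; i++) {
        struct packet_stream *out = in->rtp_sinks[i].sink;
        if (out == NULL)
            continue;
        out->packets_out++;       /* a real proxy would write to the socket here */
        forwarded++;
    }
    return forwarded;
}
```

Note that the loop only touches the originating packet_stream and its sink_handler entries; it never has to look at monologues or subscriptions, which matches the point that those are resolved at signalling time.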

So, if we need to change RTP packets or trigger other procedures in parallel before sending out the traffic, we can use the sink_handler and its sink_attrs attributes, which affect how each entry in the rtp_sinks list is handled.
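As an illustration of such a per-sink change, the sketch below rewrites the RTP payload type for one destination only. The rewrite_pt and new_pt fields and the prepare_for_sink() function are hypothetical, invented for this example, and are not part of RTPEngine's sink_attrs. The only fact relied on is the RTP header layout: a 12-byte fixed header whose second byte carries the marker bit (top bit) and the 7-bit payload type.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical per-sink attributes, loosely modelled on the idea of sink_attrs. */
struct sink_attrs {
    unsigned int rewrite_pt:1;   /* hypothetical: rewrite payload type for this sink */
    unsigned char new_pt;        /* hypothetical: payload type to write (0..127) */
};

/* Copies the packet into `out` and applies the per-sink change to the copy,
 * so other sinks still see the original bytes.
 * Returns the number of bytes to send, or 0 if the packet is unusable. */
size_t prepare_for_sink(const struct sink_attrs *attrs,
                        const unsigned char *pkt, size_t len,
                        unsigned char *out, size_t out_size)
{
    assert(attrs != NULL && pkt != NULL && out != NULL);
    if (len < 12 || len > out_size)
        return 0;                /* shorter than the fixed RTP header, or too big */

    memcpy(out, pkt, len);
    if (attrs->rewrite_pt) {
        /* keep the marker bit, replace the 7-bit payload type */
        out[1] = (unsigned char)((out[1] & 0x80) | (attrs->new_pt & 0x7f));
    }
    return len;
}
```

Working on a copy is a deliberate choice in this sketch: with several sinks on one stream, each destination can get its own variant of the packet without affecting the others.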

I still need to spend more time understanding how RTPEngine handles SRTP and what changes would be appropriate in xt_RTPENGINE, the kernel module.